In the article “Death to Good Graphics,” I argued that the industry needed to spend less money on graphics and focus more on gameplay and content. Each graphics generation makes game space cost more to produce, which means the same budget makes for a smaller, shallower game. Add in the fact that AAA titles frequently blow a lot of their budget on lavishly produced cutscenes, and we wind up with less game for our gaming dollars. At the time I was afraid of a future where a lot of AAA games would become two-hour technology demos with little replay value. It turns out that didn’t really happen, although that has as much to do with hardware as it does with game design.

It’s been almost a decade since I wrote that article, so I think now is a good time to circle back and see how things have changed. A couple of weeks ago I said that the PC is a strange platform. As it turns out, the last decade has been a strange period of time even by PC standards. I’m going to try to describe what’s been going on, but keep in mind that everything I’m about to say has been simplified quite a bit.

Moore’s law states that every year and a half, computers should get twice as fast. This idea of constantly rising clock speeds would be more accurately called Moore’s Prediction, but the prediction was so accurate for so long that we treated it as a law.

Processor Speeds

It’s actually a little more complicated than rising clock speeds, but “twice as fast every 18 months” is close enough to the truth to work as a rule of thumb. That rule took hold in the 1960s and remained true for the next 40 years, but that growth finally came to an end in the new millennium. Processor speeds were around 2.6 GHz in 2009. If Moore’s law had continued as before, then today’s processors would be running at an absurd 170 GHz. Instead, speeds are somewhere around 3.5 GHz and they’ve been stuck there for years. It’s possible to make machines faster than this, but doing so requires expensive cooling solutions. Even the most advanced cooling system won’t get you anywhere near 170 GHz. The current world record is only just over 8 GHz.
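The 170 GHz figure is just repeated doubling: nine years at one doubling every 18 months is six doublings, or a factor of 64. A minimal sketch of that arithmetic, assuming the 2.6 GHz baseline above and treating the 18-month rule as exact (the function name is made up for illustration):

```python
# Toy projection of clock speed under a strict "doubling every 18 months" rule.
# The 2.6 GHz (2009) baseline comes from the article; the doubling period is a
# rule of thumb, not a physical law.

def projected_speed(base_ghz: float, years: float, doubling_period_years: float = 1.5) -> float:
    """Return the speed predicted by repeated doubling over `years`."""
    doublings = years / doubling_period_years
    return base_ghz * 2 ** doublings


if __name__ == "__main__":
    projected = projected_speed(2.6, 2018 - 2009)
    print(f"Projected 2018 clock speed: {projected:.1f} GHz")  # 166.4 GHz
    # Compare with the roughly 3.5 GHz that processors actually settled at.
    print(f"Shortfall vs. 3.5 GHz: {projected / 3.5:.0f}x")
```

The exact result is about 166 GHz, which rounds to the 170 GHz quoted above.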

Clock speeds have basically stopped going up, but engineers have managed to nudge performance forward a bit by adding more cores. We can’t really make a processor much faster, but we can put more of them into a single machine. Imagine a horse-drawn wagon. You might be able to get it to go faster by breeding stronger, healthier, faster horses, but eventually you’ll hit the upper limit of what a horse is capable of. Once you hit the point where you’ve got the Captain America of horses pulling your wagon, the only way to gain more speed is to add more horses. The problem is that twice as many horses doesn’t mean you’ll go twice as fast, and the gains drop off sharply.

The trick here is that additional cores only help with performance if the machine is doing multiple jobs that can be performed in parallel, and if the original programmer designed their software to take advantage of them. This means it’s tough to give an absolute answer about how much faster today’s computers are in comparison to the machines of 2009. Depending on what the machine is doing, it can be anywhere from barely improved to massively faster.
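The “more horses” problem has a standard formulation called Amdahl’s law, which isn’t something the article itself invokes: if only a fraction p of a job can be split across n cores, the best-case speedup is 1 / ((1 - p) + p / n). A minimal sketch, where the 75% parallel fraction is a hypothetical value chosen for illustration:

```python
# Amdahl's law: the serial part of a job limits how much extra cores can help.

def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup when `parallel_fraction` (0..1) of the work
    is spread evenly across `cores` and the rest runs on one core."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical program where 75% of the work parallelizes cleanly.
    for n in (1, 2, 4, 8, 16, 64):
        print(f"{n:>2} cores: {amdahl_speedup(0.75, n):.2f}x")
```

With p = 0.75, two cores give 1.60x, sixteen give about 3.37x, and no number of cores can ever pass 1 / (1 - p) = 4x. That is the sharp drop-off in the wagon analogy, and it is why a program written for a single core sees almost no benefit at all.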

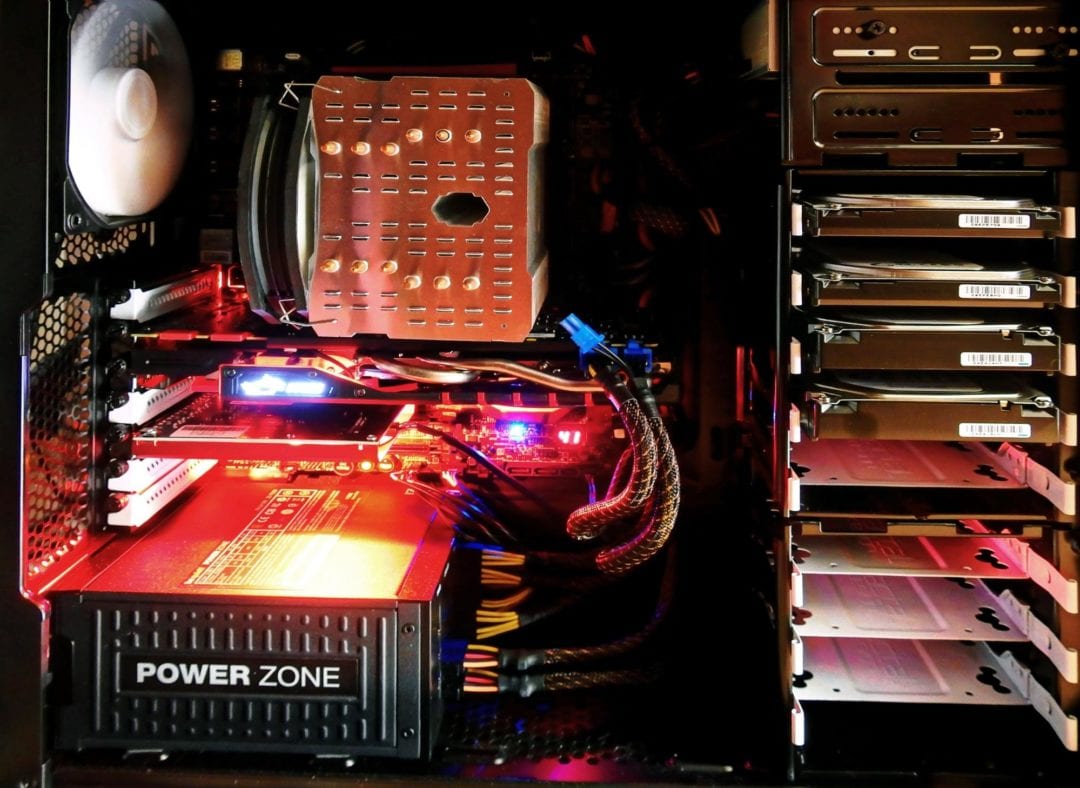

Graphics Hardware

The graphics processing front is even more complicated. It’s really difficult to properly compare the graphics hardware of 2009 with the graphics hardware of today. A modern card is made up of thousands of processing cores. These aren’t generalized processors like the CPU in your PC. Instead they’re much smaller and focused on a simpler task. However, putting 10 times as many cores on a card doesn’t automatically make it 10 times faster. There’s still the challenge of breaking up a rendering task to keep all of those cores busy. Certainly newer cards are faster, but figuring out how much faster is a tricky question. A 2009 card might not have the required features to run a 2018 game, and a 2009 game probably won’t be able to maximize the use of a 2018 card. (I’m basing this on benchmarking results. I don’t understand the sorcery performed at the driver layer so I’m not sure how effectively an older game can saturate the cores on a newer card.)

For fun, I used this site to measure a GeForce GTS 250 against an RTX 2080 Ti, which are graphics cards from 2009 and 2018, respectively. According to this extremely informal measure, the newer device is 25 to 32 times faster than the older one. Moore’s law says that you’d expect processing power to increase 64-fold after nine years, which means graphics cards have come close to keeping up with those projections, falling only one or two doublings short. That’s pretty impressive, considering how processor clock speeds have leveled off.
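Counting doublings rather than raw multipliers shows how close that is. A minimal sketch, assuming the 25x to 32x range from the informal benchmark above and the same 18-month rule (the helper names are hypothetical):

```python
import math

DOUBLING_PERIOD = 1.5  # years per doubling, per the "18 months" rule of thumb
YEARS = 2018 - 2009    # GTS 250 (2009) to RTX 2080 Ti (2018)


def expected_factor(years: float = YEARS, period: float = DOUBLING_PERIOD) -> float:
    """Growth factor the rule of thumb predicts over `years`."""
    return 2 ** (years / period)


def years_behind(measured: float, years: float = YEARS, period: float = DOUBLING_PERIOD) -> float:
    """How far (in years) a measured speedup lags the predicted one."""
    missing_doublings = math.log2(expected_factor(years, period)) - math.log2(measured)
    return missing_doublings * period


if __name__ == "__main__":
    print(f"Predicted: {expected_factor():.0f}x ({math.log2(expected_factor()):.0f} doublings)")
    for measured in (25, 32):
        print(f"Measured {measured}x = {math.log2(measured):.2f} doublings, "
              f"about {years_behind(measured):.1f} years behind schedule")
```

By this reckoning, 32x is five of the six predicted doublings (18 months behind), and 25x is roughly two years behind.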

From this you might think that your average PC has more than 30 times the graphical horsepower of a 2009 machine. Sadly, it’s not that simple. Over the last few years the PC gaming scene has been shaken by the intrusion of cryptocurrency mining. I’m sure you’ve heard of Bitcoin and blockchain technology. Strange as it may seem, the cryptocurrency craze made a complete mess of PC gaming.

The Invasion of Internet Money

The trick with cryptocurrencies is that they’re entirely digital, with no central bank or government to regulate them, so they need some way to prevent fraudsters from magically printing more digital money. They do this by creating a system where everyone is constantly checking up on everyone else’s transactions to make sure they’re legitimate. The more digital bean counters there are, the more secure the system is overall. If you lend your computer to a cryptocurrency network for validating transactions, then sometimes the system will pay you a bit of money. The more processing power you pour into the system, the more often you’ll get paid. You’ve heard of Bitcoin mining? That’s what this is.
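In proof-of-work systems like Bitcoin, the reason processing power translates into payment is that earning the reward means winning a brute-force guessing race. The toy sketch below is a simplification, not Bitcoin’s actual scheme: real Bitcoin hashes a block header twice with SHA-256 and compares the result against a numeric target, while here the block text, the `mine` function, and the “leading hex zeros” difficulty rule are all hypothetical stand-ins:

```python
import hashlib
from itertools import count


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Toy proof-of-work: try nonces 0, 1, 2, ... until the SHA-256 hex digest
    of block_data + nonce starts with `difficulty` zeros.
    Returns (nonce, hex_digest)."""
    target_prefix = "0" * difficulty
    for nonce in count():
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest


if __name__ == "__main__":
    # Each extra zero multiplies the expected number of guesses by 16.
    for difficulty in range(1, 5):
        nonce, digest = mine("alice pays bob 5 coins", difficulty)
        print(f"difficulty {difficulty}: {nonce + 1} hashes tried -> {digest[:16]}...")
```

There’s no shortcut to finding a winning nonce, only guessing faster, so a miner’s share of the rewards roughly tracks their share of the network’s total hashing power. A card with thousands of cores can make thousands of guesses at once, which is why graphics cards were so attractive.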

The problem is that this created a powerful incentive for people to build machines with lots of processing power. Like I said above, graphics cards have thousands of cores. In terms of processing cycles per dollar, they were the best deal around. It was an obvious move to take all of that processing power and put it to work on cryptocurrency mining. The result was that cryptominers began buying up graphics cards in bundles and stuffing them into specialized rigs. You’d end up with a machine that had six graphics cards that never did any rendering at all.

The miners bought up all the best graphics cards. When those ran out, they bought up the second-best cards. Then brand new cards came out, and they bought those too. Prices went through the roof. Normally a graphics card starts out expensive and gets cheaper over time, but this crypto-mining business meant that prices instead kept going up. The card manufacturers could not make them fast enough to keep up with demand. For a while, you could take the price of a current-generation card and use that money to buy an Xbox One S, a PlayStation 4, and a Nintendo Switch, and you’d still have money left over.

This meant that even though graphics cards were making great strides in terms of performance, that power wasn’t making it into the hands of the masses. From the standpoint of a developer, the PC was basically stalled for several years. Sure, a few high-end enthusiasts could afford new hardware, but that group wasn’t large enough to support a AAA title. If you wanted to release a game on the PC, you needed to aim for the aging hardware that dominated the market.

Making matters worse, all of this was going on just as VR, 60 fps gaming, and 4K displays were taking off. The PC suddenly had new things to spend graphics power on, and lots of people couldn’t take advantage of it.

The cryptomining bubble seems to have popped and prices are falling for the first time in years. I predict that once the Christmas season is over, we’ll finally be back to some sort of normalcy and prices will return to pre-cryptocurrency levels.

Wrapping Up

CPU speeds haven’t changed much. The same is true for most other hardware. Memory, hard drive speeds, and internet bandwidth have been incrementally improved without any major revolutions disrupting the industry. The only place where any serious gains were made was with graphics processing, and cryptocurrencies stalled the rollout of those improvements and prevented them from becoming mainstream.

Overall, I think this was a good thing. For the first time ever, you can have a five-year-old PC and still be reasonably confident that you’ll be able to buy a new AAA game and run it on the default settings. This probably explains why the PC is doing so well lately. According to this Global Games Market Report, the PC market is about as big as all of the consoles combined. That’s a major reversal from 2009, when the PC was viewed as less important to publishers than the Xbox, PlayStation, and Wii. The technology stagnation meant it was easier for people to enjoy PC gaming without needing the expense and hassle of biennial upgrades.

This coincided with a very long console generation for the PlayStation 3 and Xbox 360. While the spike in hardware prices was annoying for consumers, it was probably a boon for developers. I’m sure they enjoyed being able to crank out many games with the same engine rather than needing to throw their engine away and start over every four years.

Like I said, it’s been a strange decade. Next week I’ll look at the way games have changed (or not) and see what developers have been doing with this power.

Published: Nov 27, 2018 10:11 am